What is libcamera and why should you use it

Reading a picture from a camera

Once upon a time, video devices were not that complex. To use a camera back then, your application could iterate through the /dev/video* devices, pick the camera you wanted and immediately start using it. You could query which pixel formats, frame rates, resolutions and other properties the camera supported. You could even easily change them if you wanted.

This still works for some cameras: basically every USB camera and most laptop cameras still work that way.
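The classic approach described above can be sketched in a few lines. This is a minimal Python sketch, assuming a Linux system where V4L2 nodes show up as /dev/videoN and where sysfs exposes a human-readable name under /sys/class/video4linux/videoN/name. The function name `list_video_devices` and its parameters are made up for illustration.

```python
import re
from pathlib import Path


def list_video_devices(dev_dir="/dev", sysfs_dir="/sys/class/video4linux"):
    """Return (device path, name) pairs for every videoN node, in numeric order."""
    devices = []
    for node in Path(dev_dir).glob("video*"):
        match = re.fullmatch(r"video(\d+)", node.name)
        if match is None:
            continue  # skip anything that is not a plain videoN node
        name_file = Path(sysfs_dir) / node.name / "name"
        # The sysfs name is optional; fall back to a placeholder if it is missing.
        name = name_file.read_text().strip() if name_file.is_file() else "unknown"
        devices.append((int(match.group(1)), str(node), name))
    # Sort numerically so that video10 comes after video2.
    return [(path, name) for _, path, name in sorted(devices)]


if __name__ == "__main__":
    for path, name in list_video_devices():
        print(f"{path}: {name}")
```

Querying formats and frame rates would then be done per device through the V4L2 ioctl interface, which is left out here to keep the sketch short.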

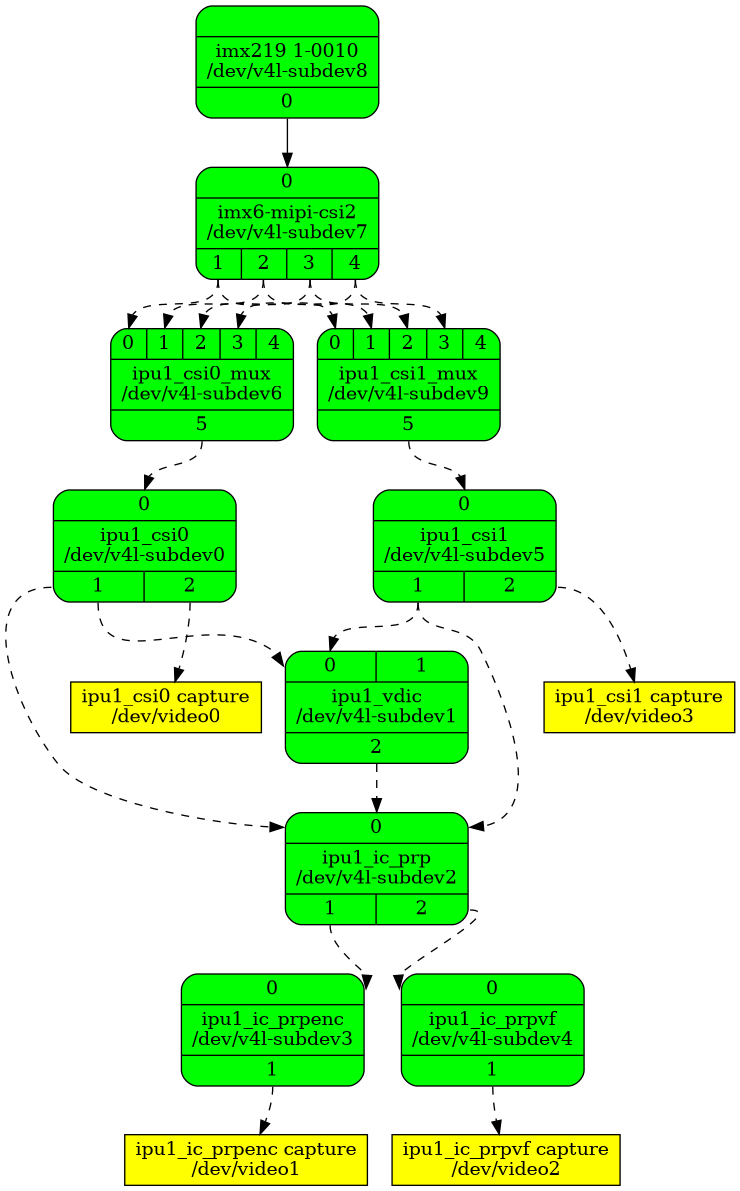

The problem, especially in embedded systems, is that there is no such thing as "the camera" anymore. The camera system is rather a complex pipeline of image processing nodes that the image data traverses to be shaped the way you want. Even if the result of this pipeline ends up in a video device, you cannot configure things like cropping and resolution directly on that device as you used to. Instead, you have to use the media controller API to configure and link each of these nodes to build up your pipeline.
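To give a feel for what configuring and linking nodes involves, here is a minimal Python sketch that only builds `media-ctl` command lines (the tool from v4l-utils) for one linear pipeline, without running them. The entity names, pad numbers, media bus code and the /dev/media0 path are made-up placeholders; real values come from `media-ctl -p` on your own hardware. The helper names are hypothetical as well.

```python
import shlex


def link_arg(src, src_pad, sink, sink_pad, enabled=True):
    """Format one link description for `media-ctl -l`."""
    flag = 1 if enabled else 0
    return f'"{src}":{src_pad} -> "{sink}":{sink_pad} [{flag}]'


def format_arg(entity, pad, mbus_code, width, height):
    """Format one pad format description for `media-ctl -V`."""
    return f'"{entity}":{pad} [fmt:{mbus_code}/{width}x{height}]'


def pipeline_commands(media_dev, chain, mbus_code, width, height):
    """Build the media-ctl invocations that link and configure a linear chain.

    `chain` is a list of (entity, sink_pad, source_pad) tuples ordered from
    the sensor onwards; a pad that does not exist is given as None.
    Simplification: the same format is set on every pad, which a real
    pipeline with a scaler or debayer stage would not do.
    """
    commands = []
    # First enable the link between each pair of neighbouring entities.
    for (src, _, src_pad), (sink, sink_pad, _) in zip(chain, chain[1:]):
        commands.append(["media-ctl", "-d", media_dev, "-l",
                         link_arg(src, src_pad, sink, sink_pad)])
    # Then set the format on every existing pad along the chain.
    for entity, sink_pad, src_pad in chain:
        for pad in (sink_pad, src_pad):
            if pad is not None:
                commands.append(["media-ctl", "-d", media_dev, "-V",
                                 format_arg(entity, pad, mbus_code, width, height)])
    return commands


if __name__ == "__main__":
    # Hypothetical sensor -> CSI receiver -> scaler chain.
    chain = [("sensor", None, 0), ("csi-receiver", 0, 1), ("scaler", 0, 1)]
    for cmd in pipeline_commands("/dev/media0", chain, "SRGGB10_1X10", 1920, 1080):
        print(shlex.join(cmd))
```

Even this toy chain of three entities needs seven commands, and every one of them depends on names and pad numbers that differ from platform to platform.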

To show what this may look like, here is a graph from a previous post [3]:

What is libcamera?

This is how libcamera is described on its website [1]:

libcamera is an open source camera stack for many platforms with a core userspace library, and support from the Linux kernel APIs and drivers already in place. It aims to control the complexity of embedded camera hardware by providing an intuitive API and method of separating untrusted vendor code from the open source core. libcamera aims to encourage the development of new embedded camera applications by limiting the complexity that developers have to deal with. The interface is designed around the way that modern embedded camera hardware works.

The first time I heard about libcamera was at the Embedded Linux Conference 2019, where Jacopo Mondi gave a talk [2] about the public API for the first stable libcamera release. I have been working with cameras in several embedded Linux products and know for sure how complex [3] these little beasts can be. The configuration also differs depending on which platform or camera you are using, as there is no common way to set up the image pipeline. You will soon have special cases for all your platform variants in your application, which is not what we strive for.

libcamera tries to solve this by providing one library that takes care of all that complexity for you.

For example, say you want to adjust a simple thing such as the contrast of an IMX219 camera module connected to a Raspberry Pi. To do that without libcamera, you first have to set up a proper image pipeline that takes the camera module and connects it to the several ISP (Image Signal Processing) blocks that your processor offers in order to get the right image format, resolution and so on. Somewhere in all this configuration, you realise that neither the camera module nor the ISPs support adjusting the contrast. Too bad. To achieve this you have to take the image, pass it to a self-written contrast algorithm, create a gamma curve that the IPA (Image Processing Algorithm) understands and actually set the gamma. Yes, the contrast is adjusted with a gamma curve for that particular camera on Raspberry Pi. (Have a look at the implementation of that IPA block [7] for Raspberry Pi.)

This is exactly the kind of thing libcamera understands and abstracts for the user. libcamera will figure out what graph it has to build depending on what you want to do and which processing operations are available at your various nodes. An application that uses libcamera for the video device can set contrast the same way for all cameras and platforms. After all, that is what you wanted.
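The idea of expressing contrast through a gamma curve can be illustrated with a minimal Python sketch. This is not the Raspberry Pi IPA code: the curve representation (a list of (x, y) points on a 16-bit scale), the function names and the choice of mid-grey as the pivot are assumptions made for illustration only.

```python
FULL_SCALE = 65535
MID_GREY = FULL_SCALE / 2


def gamma_curve(gamma=2.2, points=9):
    """Build a simple power-law tone curve as (x, y) points on a 16-bit scale."""
    curve = []
    for i in range(points):
        x = i / (points - 1)
        curve.append((round(x * FULL_SCALE), round((x ** (1 / gamma)) * FULL_SCALE)))
    return curve


def apply_contrast(curve, contrast):
    """Stretch (contrast > 1) or flatten (contrast < 1) the curve around mid-grey."""
    adjusted = []
    for x, y in curve:
        new_y = (y - MID_GREY) * contrast + MID_GREY
        # Clamp so the output stays inside the valid 16-bit range.
        adjusted.append((x, round(min(max(new_y, 0), FULL_SCALE))))
    return adjusted


def lookup(curve, x):
    """Linearly interpolate the curve at input value x."""
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        if x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    raise ValueError("x is outside the curve")


if __name__ == "__main__":
    base = gamma_curve()
    boosted = apply_contrast(base, 1.3)
    for (x, y), (_, y_boosted) in zip(base, boosted):
        print(f"{x:6d}: {y:6d} -> {y_boosted:6d}")
```

With a contrast of 1.0 the curve is returned unchanged; higher values push dark output values darker and bright ones brighter, which is the effect the user perceives as more contrast.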

Camera Stack

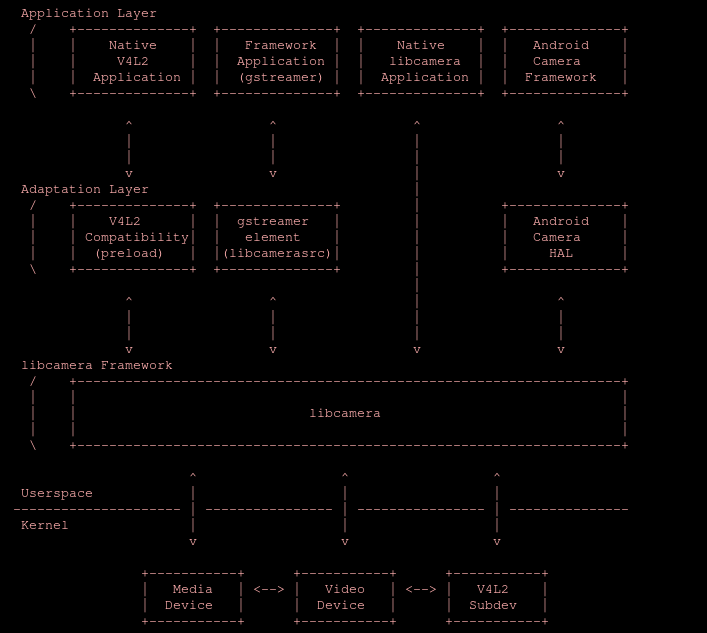

As the libcamera library is fully implemented in userspace and uses already existing kernel interfaces to communicate with the hardware, you need no extra underlying support in terms of separate drivers or kernel support.

libcamera itself exposes several APIs depending on how the application wants to interface with the camera. It even has a V4L2 compatibility layer that emulates a high-level V4L2 camera device, to make the transition smooth for all those V4L2 applications out there.

Read more about the camera stack in the libcamera documentation [4].

Conclusion

I really like this project and I think we need an open-source stack that supports many platforms. The situation we are in right now, with vendor-specific drivers, libraries and IPAs, is not sustainable at all. It takes too much effort to evaluate a few cameras from different vendors, just because every vendor has its own way of controlling the camera with its own closed-source and platform-specific layers. Been there, done that.

For those vendors that do not want to open-source their secret image processing algorithms, libcamera uses a plugin system for IPA modules which lets vendors keep their secrets but still be compatible with libcamera. All open-source modules are identified based on digital signatures, while closed-source modules are instead isolated inside a sandbox environment with restricted access to the system. A win-win concept.

The project itself is still quite young and needs more work to support more platforms and cameras, but the foundation is stable. Raspberry Pi is now a commonly used platform, both commercially and among hobbyists, and the fact that the Raspberry Pi Foundation has chosen libcamera as its primary camera system [8] must tell us something.

References

| [1] | https://libcamera.org/faq.html |

| [2] | https://www.youtube.com/watch?v=FovurKj28rw |

| [3] | https://www.marcusfolkesson.se/blog/v4l2-and-media-controller/ |

| [4] | https://libcamera.org/docs.html |

| [5] | https://git.libcamera.org/libcamera/libcamera.git |

| [6] | https://github.com/raspberrypi/libcamera-apps.git |

| [7] | https://git.libcamera.org/libcamera/libcamera.git/tree/src/ipa/raspberrypi/controller/rpi/contrast.cpp |

| [8] | https://libcamera.org/entries/2020-05-05.html |